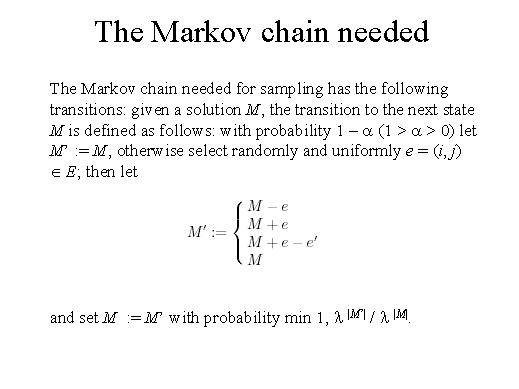

The Markov chain needed for sampling has the following transitions: given a solution M, the transition to the next state M is defined as follows: with probability 1 - a (1 > a > 0) let M' := M, otherwise select randomly and uniformly e = (i, j) Î E… and set M := M' with probability min 1, l |M'| / l |M|.