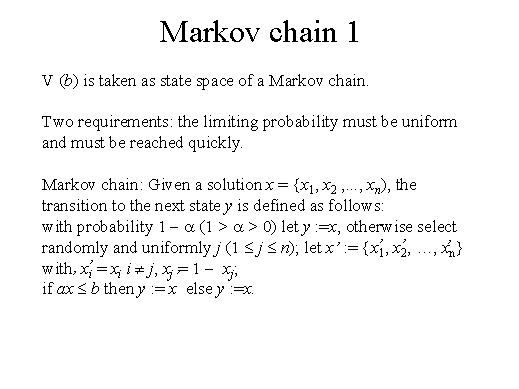

V (b) is taken as state space of a Markov chain. Two requirements:

the limiting probability must be uniform and must be reached quickly.

Markov chain: Given a solution x = {x1,

x2,..., xn),

the transition to the next state y is defined as follows: with

probability 1 - a (1 > a

> 0) let y := x ,otherwise select randomly

and uniformly j(1 £ j £

n); let x' := {x1,

x2,..., xn}

with

xi = xi

i ¹ j, xj=

1 - xj; if ax £

b then y := x else

y :=x.