The matrix P is called a homogeneous transition or stochastic

matrix because all the transition probabilities pij

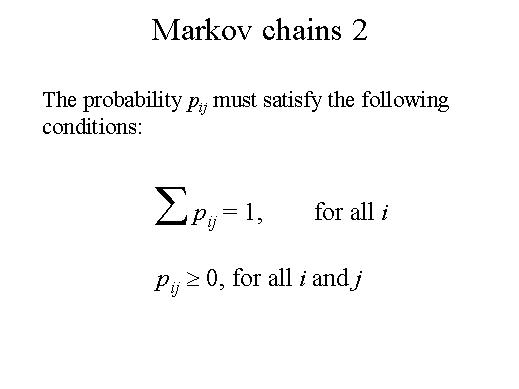

are fixed and independent of time. The probability pij

must satisfy the following conditions.
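Both conditions can be checked mechanically. The following is a minimal Python sketch, assuming $P$ is stored row by row as a list of lists, with row $i$ holding the probabilities $p_{ij}$ of moving from state $i$ to each state $j$. The function name `is_stochastic` and the floating-point tolerance `tol` are illustrative assumptions, not part of the text.

```python
def is_stochastic(P, tol=1e-9):
    """Return True if P is a square matrix (list of rows) whose entries are
    nonnegative and whose rows each sum to 1, within tolerance `tol`.
    Hypothetical helper for illustration."""
    n = len(P)
    for row in P:
        if len(row) != n:              # one row and one column per state
            return False
        if any(p < 0 for p in row):    # condition p_ij >= 0
            return False
        if abs(sum(row) - 1.0) > tol:  # condition sum_j p_ij = 1
            return False
    return True


# Illustrative matrices (made up for this example)
print(is_stochastic([[0.5, 0.5], [0.2, 0.8]]))  # rows sum to 1, entries >= 0
print(is_stochastic([[0.5, 0.6], [0.2, 0.8]]))  # first row sums to 1.1
```

The tolerance is there because row sums computed in floating point are rarely exactly 1.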

The Markov chain is now defined. The transition matrix $P$ and the initial probabilities $a_j^{(0)}$ associated with the states $E_j$ together completely define a Markov chain. One usually thinks of a Markov chain as describing the transitional behaviour of a system over equal time intervals.
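Because $P$ and the initial probabilities fix the chain, they also fix the probability of occupying each state after any number of transitions. Writing $a_j^{(n)}$ for the probability of being in $E_j$ after $n$ transitions (notation assumed here, extending $a_j^{(0)}$), one has $a_j^{(n)} = \sum_i a_i^{(n-1)} p_{ij}$. The following is a minimal pure-Python sketch of that recursion; the helpers `step` and `distribution_after` and the example matrix are hypothetical.

```python
def step(a, P):
    """One transition: a_j(n) = sum_i a_i(n-1) * p_ij.
    `a` is the current state distribution, `P` the transition matrix (list of rows)."""
    n = len(a)
    return [sum(a[i] * P[i][j] for i in range(n)) for j in range(n)]


def distribution_after(a0, P, steps):
    """State probabilities after `steps` transitions, starting from the
    initial probabilities `a0`. Hypothetical helper for illustration."""
    a = list(a0)
    for _ in range(steps):
        a = step(a, P)
    return a


# Illustrative two-state chain that starts in the first state with certainty
P = [[0.5, 0.5], [0.2, 0.8]]
a0 = [1.0, 0.0]
print(distribution_after(a0, P, 2))  # mathematically [0.35, 0.65], up to rounding
```

Lists keep the sketch dependency-free; with NumPy the same quantity is `a0 @ np.linalg.matrix_power(P, n)`.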

In some situations the length of the interval depends on the characteristics of the system, so the intervals may not be equal. Such a process is referred to as an imbedded Markov chain.