A number of studies on the types of errors and faults in software were carried out at firms like IBM, which have developed major software.

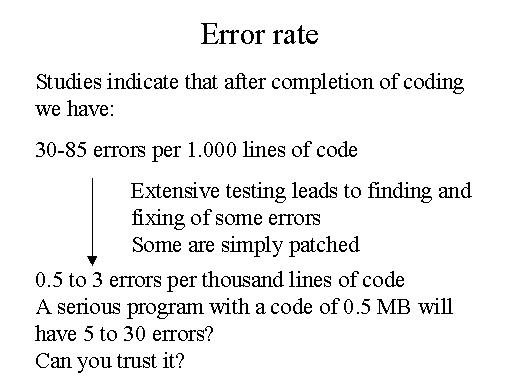

In the beginning stages, there could be 30 to 85 errors per 1,000 lines of code. This is a very large number. After extensive testing, which leads to finding and fixing some of these errors and faults, the count drops to about 0.5 to 3 errors per thousand lines of code.

Now, look at this figure: even after extensive testing by major firms, the studies still indicate 0.5 to 3 errors per thousand lines of code.

You could look at the number of patches that come out for the Windows operating system; almost every day you can see a new patch on the web site. This means that, probably, the software has to be corrected almost every day.

What is the implication of 0.5 to 3 errors per thousand lines of code? Any respectable code that performs a useful function today would easily be 0.5 megabyte and above. A 0.5 megabyte program will have about 5 to 13 faults and errors. Do you think that is an acceptable level? Can you trust software to behave properly? This is where testing comes into the picture.
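
To make the arithmetic behind these densities concrete, here is a minimal sketch that scales a per-thousand-lines error rate up to a whole program. The function name `expected_defects` and the 100,000-line program size are assumptions chosen only for illustration; the lecture does not say how megabytes map to lines of code, so the sketch takes a line count directly. The density ranges are the ones quoted above.

```python
def expected_defects(lines_of_code, low_per_kloc, high_per_kloc):
    """Return the (low, high) expected error count for a program of the given size.

    Densities are expressed as errors per 1,000 lines of code (KLOC).
    """
    kloc = lines_of_code / 1000
    return (kloc * low_per_kloc, kloc * high_per_kloc)


# Hypothetical program size, used only as an example.
size = 100_000

# Early-stage density quoted in the lecture: 30 to 85 errors per KLOC.
before_testing = expected_defects(size, 30, 85)

# Post-testing density quoted in the lecture: 0.5 to 3 errors per KLOC.
after_testing = expected_defects(size, 0.5, 3)

print(before_testing)  # (3000.0, 8500.0)
print(after_testing)   # (50.0, 300.0)
```

Under these assumptions, extensive testing removes most of the errors, yet tens to hundreds may still remain in a program of that size.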