We deal with problems where we want to minimise over all the feasible solutions for a given instance I a given function f. We can relax these algorithms in the following way.

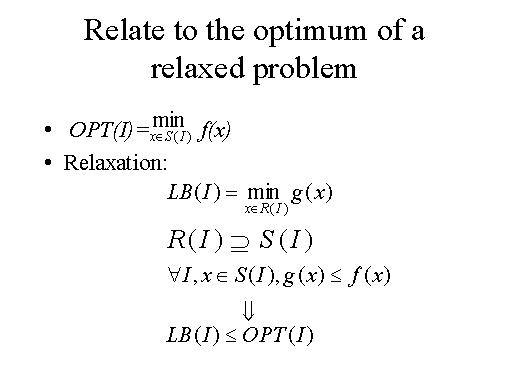

Rather then considering the set of feasible solution S(I), we consider a larger set of solutions that we denote by R(I). Then we define a different problem on a different objective function. We call this problem LB(I).

Rather then computing the optimum, we compute this value LB(I) that is the minimum over all the solutions in the new set of solutions R(I) of a function g(x). Function g(x) has the following properties: for every instance and every possible solution in the original set solution S(I), g(x) is less o equal than f(x).

From this we know that this function LB is smaller than the optimal solution. This is called a lower bound on the optimal solution.