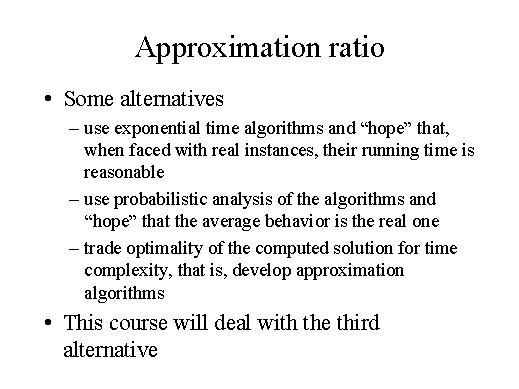

This is a feasible approach and in my opinion most people follow this approach. But you should realise that if you follow this approach it can happen that for some instances your algorithms will require years if not centuries.

An another approach is to try to analyse your algorithm from a nerrigh case point of view. Then you hope that the real distribution of the input instances is similar to the distribution you use to perform the probability analysis. Actually the problem in this case is that very often we do not know what is the probability distribution of the real instances.

Usually people assume that instances are distributed according to the uniform distribution. I am not sure that this is a realistic assumption.

A third alternative instead is to trait optimality of the computed solution for time complexity. This leads to the development of approximation algorithm, actually polynomial time approximation algorithm that is efficiently polynomial approximation algorithms.

This course will deal with these kind of alternatives. So the main gaol of this course is to give you some tech in order to design approximation algorithms.