The data needed for performance evaluation can be collected from within the system itself.

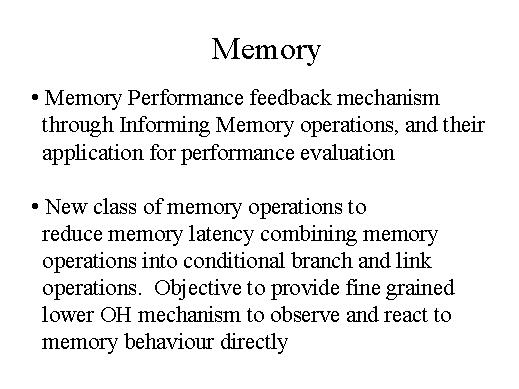

Memory and communication latency are common in such architectures, and reducing this latency improves performance. The approach combines several operations and can handle fine-grained programming, particularly in FPGA implementations of the architectures. The system has to react to the kind of memory behaviour it observes. Two important observations come from studies on Alpha machines and the R2000, in which a case study of access control was examined. Cache coherence improved performance, but it increased overhead by 25 to 40 percent. It is therefore debatable whether the higher overhead is a fair price for the improvement in cache performance.
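The trade-off between the coherence gain and its 25 to 40 percent overhead can be made concrete with a small calculation. The sketch below is illustrative only: it assumes the overhead is charged as a fraction of the improved run time, and the names `net_speedup`, `break_even_speedup`, and the sample raw speedup of 1.3 are hypothetical, not values taken from the studies mentioned above.

```python
def net_speedup(raw_speedup: float, overhead: float) -> float:
    """Speedup that remains once the overhead is charged against the raw gain.

    raw_speedup: hypothetical factor by which run time shrinks before overhead.
    overhead: extra cost as a fraction of the improved run time (0.25 means 25%).
    This cost model is an assumption made for illustration.
    """
    if raw_speedup <= 0 or overhead < 0:
        raise ValueError("raw_speedup must be positive and overhead non-negative")
    return raw_speedup / (1.0 + overhead)


def break_even_speedup(overhead: float) -> float:
    """Raw speedup needed for the net speedup to equal exactly 1.0."""
    return 1.0 + overhead


if __name__ == "__main__":
    assumed_raw = 1.3  # hypothetical raw gain, not a measured value
    for overhead in (0.25, 0.40):  # the range quoted in the text
        print(
            f"overhead={overhead:.0%} "
            f"break-even={break_even_speedup(overhead):.2f}x "
            f"net={net_speedup(assumed_raw, overhead):.2f}x"
        )
```

Under this assumed model, a raw gain below 1.25x is wiped out at 25 percent overhead, and a gain below 1.40x is wiped out at 40 percent, which is one way to read why the benefit is debatable.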

Nevertheless, this became a new technique for addressing memory system performance. Another technique for handling memory is intensive heap allocation. It was studied in connection with a compiler on machines such as DEC and HP 9000 systems, and performance was found to be good only on the DEC machine. All such performance evaluation exercises have to be run against standard benchmarking systems.

The advanced heap allocation systems have not produced very meaningful results. The debatable issue is whether a good simulation environment is available. This needs to be studied in detail and remains an open area of research. Another simulation environment that has been conceived is the parallel-simulated virtual machine.

Computing environments today are heterogeneous. Tools for performance evaluation exist, such as Tango and Speedy, but they are not integrated development environments for carrying out simulation. In this particular case, the general prediction made for handling programming was found to be good.